The Accelerator

Global AI Trends: What's Happening Now

🤖 AI Agents Move from Hype to Reality (Sort Of)

The most-hyped trend of 2025, AI agents that can autonomously complete complex tasks, is hitting the "trough of disillusionment." Tech giants promised digital workers that could handle entire workflows, but experiments by Anthropic and Carnegie Mellon found that agents make too many mistakes for businesses to trust them with processes where significant money is at stake. The challenge? In a multi-step process, an agent must get every step right, and small per-step error rates compound quickly. Current technology isn't there yet. Expect 2026 to focus on hybrid approaches: agents handling routine subtasks while humans maintain oversight and make critical decisions.
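Why does accuracy at every step matter so much? Here is a minimal Python sketch. It assumes each step of a task succeeds independently and with the same probability. The 95% per-step rate and the step counts are illustrative assumptions, not figures from the Anthropic or Carnegie Mellon experiments.

```python
# Illustrative only: assumes every step of an agent's task succeeds
# independently with the same probability. Real agent errors are not
# this tidy, and the numbers used here are hypothetical.

def chance_whole_task_succeeds(per_step_accuracy: float, steps: int) -> float:
    """Probability that all `steps` succeed when each one succeeds
    independently with probability `per_step_accuracy`."""
    if not 0.0 <= per_step_accuracy <= 1.0:
        raise ValueError("per_step_accuracy must be between 0 and 1")
    if steps < 0:
        raise ValueError("steps must be non-negative")
    return per_step_accuracy ** steps


if __name__ == "__main__":
    # Same hypothetical 95% per-step accuracy, longer and longer tasks.
    for steps in (1, 5, 10, 20):
        p = chance_whole_task_succeeds(0.95, steps)
        print(f"{steps:>2} steps at 95% each: {p:.0%} chance of a flawless run")
```

Under these assumptions, an agent that is right 95% of the time on each step completes a 20-step task without error only about 36% of the time. That is why hybrid setups keep a person checking the steps that matter most.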

🌍 World Models: The Next Frontier

As large language models (LLMs) reach "peak data"—having consumed most of the accessible text on the internet—researchers are turning to world models: AI systems that learn by watching videos and building representations of how things move and interact in 3D spaces. Think of it as the difference between predicting the next word and predicting what happens next in the physical world. Yann LeCun, one of AI's "godfathers," left Meta to launch a world model startup reportedly seeking a $5 billion valuation. For robotics, autonomous vehicles, and immersive technologies, 2026 could be the breakthrough year.

📊 Small Models, Big Impact

While headlines focus on ever-larger AI models, the smart money is moving toward smaller, specialized models fine-tuned for specific tasks. AT&T's chief data officer Andy Markus explains: "Fine-tuned small language models will be the big trend and become a staple used by mature AI enterprises in 2026, as the cost and performance advantages will drive usage over out-of-the-box LLMs." For small businesses with limited resources, this trend is particularly important—you don't need a supercomputer to get AI working for you.

AI POLICY WATCH:

The Federal-State Showdown

In a move that will shape AI regulation for years to come, President Trump signed an executive order on December 11, 2025, titled "Ensuring a National Policy Framework for Artificial Intelligence." The order aims to establish federal primacy over AI regulation and specifically targets state laws deemed "onerous" or inconsistent with federal policy.

What's at stake: All 50 states introduced AI-related legislation between January 2023 and October 2025, totaling 385 bills addressing individual privacy protections, transparency requirements, and systemic governance. Several significant state laws took effect on January 1, 2026, or are scheduled to take effect later this year, including:

California's Companion Chatbot Act (SB 243): Requires safety protocols to prevent content related to suicidal ideation or self-harm, with special protections for minors

Texas Responsible AI Governance Act: Establishes broad governance requirements and mandates disclosure when AI is used in healthcare diagnosis or treatment

Colorado AI Act: Requires developers of high-risk AI systems to protect against algorithmic discrimination; its effective date was delayed to June 30, 2026, and the White House has singled it out as problematic

The federal enforcement mechanism: The executive order doesn't just express disagreement with state laws. It also establishes concrete mechanisms to challenge them. The order directed the Attorney General to set up an AI Litigation Task Force within 30 days, with the specific job of identifying and challenging state AI laws. Colorado's AI Act, which addresses algorithmic discrimination in high-risk systems, is among the primary targets. The order also conditions certain federal funding on states not enacting conflicting AI laws. It requires the Commerce Secretary to identify "burdensome" state regulations within 90 days.

What this means for communities: The regulatory landscape just got more uncertain and more combative. The AI Litigation Task Force signals that this isn't a negotiation but a legal battle. The executive order doesn't immediately invalidate any state law, but legal challenges could be filed within weeks. For local businesses and organizations, this uncertainty underscores the importance of understanding AI fundamentals. Whichever level of government ultimately sets the rules, informed stakeholders will be better positioned to adapt and to advocate for their interests. Communities that understand the technology can take part in the debate rather than simply waiting to see who wins.

CED Strategies That Work

Public-Private Partnerships Gain Momentum

The Federal Reserve's 2026 National Community Investment Conference (March, Phoenix) will focus on "Innovations in Public-Private Partnership" as the key strategy for advancing economic opportunity. Community development professionals are finding that collaboration between government agencies and private sector companies leverages the strengths of both sectors. Recent successful models include infrastructure development combining municipal planning with private investment, workforce training programs linking community colleges with local employers, and small business incubators supported by both public grants and corporate sponsors.

The Multiplier Effect of Local Investment

Research confirms that investments made within communities generate a multiplier effect. Money that circulates locally is re-spent at local businesses, and each round stimulates further economic activity. Community Development Corporations (CDCs) are demonstrating this principle through affordable housing initiatives, small business development, and workforce training programs that keep economic opportunities accessible to all residents. The key insight: money spent locally has greater long-term impact than outside investment that extracts resources without building community capacity.
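The multiplier idea can be sketched with the simplest textbook model. In that model, a fixed share of every dollar is re-spent inside the community each time it changes hands, and the rest leaks out. The 50% and 20% re-spending shares below are hypothetical and were chosen only to show the contrast. Real local multipliers vary widely and are estimated with formal economic models.

```python
# Simplified textbook local-multiplier model (an assumption, not a
# measured result): a fixed share of every dollar received is re-spent
# locally each round; the remainder leaks out of the community.

def local_impact(initial: float, local_share: float, rounds: int = 50) -> float:
    """Total local spending after `rounds` rounds of re-spending."""
    if not 0.0 <= local_share < 1.0:
        raise ValueError("local_share must be in [0, 1)")
    total, dollars = 0.0, initial
    for _ in range(rounds):
        total += dollars          # money spent in the community this round
        dollars *= local_share    # the portion that stays for the next round
    return total


def closed_form(initial: float, local_share: float) -> float:
    """Limit of the geometric series: initial / (1 - local_share)."""
    return initial / (1.0 - local_share)


if __name__ == "__main__":
    investment = 100_000  # hypothetical initial investment, in dollars
    for share in (0.5, 0.2):
        total = closed_form(investment, share)
        print(f"{share:.0%} re-spent locally: about ${total:,.0f} of total local activity")
```

The loop and the closed form agree because the rounds of re-spending form a geometric series. In this toy model, the same $100,000 generates about $200,000 of local activity when half of each dollar stays local. It generates about $125,000 when only a fifth does.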

SIDEBAR:

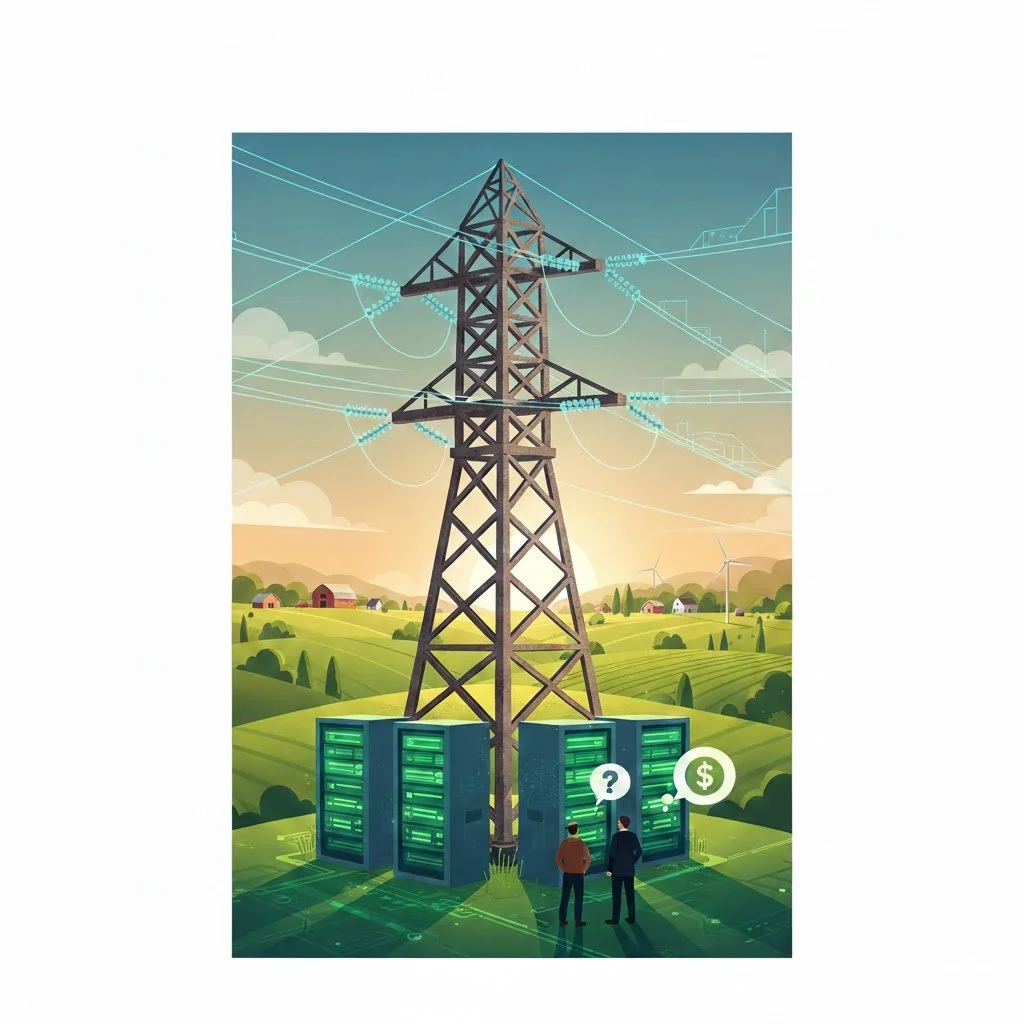

The New Bottleneck: Power, Not Processing

Why the electricity crisis matters for rural communities

For years, the AI industry's biggest constraint was silicon—not enough chips to meet demand. That bottleneck is easing, but 2026 has revealed a new limiting factor: electricity.

Training and running large AI models require enormous amounts of power. Data centers housing AI systems consume electricity at unprecedented rates, and the grid can't keep up. According to recent industry analyses, the shift from chip shortage to power shortage is fundamentally changing where AI infrastructure gets built.

What this means for rural America: Communities with available land and power capacity, exactly the profile of many rural areas, are suddenly attractive locations for data center development. The surprise data center proposal in Tucker County, West Virginia, is just the beginning. Expect more rural communities to receive similar proposals in 2026.

The double-edged sword: On one hand, data centers bring jobs, tax revenue, and infrastructure investment. On the other hand, they consume massive amounts of electricity (potentially straining local grids), require minimal local workforce once built, and often extract value without building community capacity.

The AI literacy connection: Communities facing data center proposals need to understand:

What these facilities actually do and why power availability matters

How to negotiate for community benefits beyond basic tax revenue

Whether local workforce development programs can capture long-term employment opportunities

How power consumption affects electricity costs for existing residents and businesses

What role the community can play in ensuring AI infrastructure serves local interests
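On the power question, a back-of-the-envelope conversion can put a proposal's stated capacity in household terms. This sketch makes two assumptions. The first is a hypothetical 100-megawatt facility running at a steady load all year. The second is an average of roughly 10,500 kWh of electricity per household per year. Substitute the figures from an actual proposal and from your local utility.

```python
# Back-of-the-envelope sketch. Both inputs are assumptions to replace
# with real numbers: the facility size from the proposal, and average
# household use from the local utility.

HOURS_PER_YEAR = 8_760  # 365 days x 24 hours


def annual_mwh(capacity_mw: float, load_factor: float = 1.0) -> float:
    """Energy used in a year, in megawatt-hours, at an average load of
    capacity_mw * load_factor."""
    if not 0.0 <= load_factor <= 1.0:
        raise ValueError("load_factor must be between 0 and 1")
    return capacity_mw * load_factor * HOURS_PER_YEAR


def household_equivalents(mwh: float, household_kwh_per_year: float) -> float:
    """How many average households would use the same energy in a year."""
    return mwh * 1_000 / household_kwh_per_year  # 1 MWh = 1,000 kWh


if __name__ == "__main__":
    mwh = annual_mwh(100)                        # hypothetical 100 MW facility
    homes = household_equivalents(mwh, 10_500)   # assumed kWh per household
    print(f"{mwh:,.0f} MWh per year, roughly {homes:,.0f} households' worth")
```

At these assumed figures, the facility would use about 876,000 MWh a year. That is on the order of 83,000 households' annual consumption. A lower average load (the `load_factor` parameter) scales the result down proportionally.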

The power bottleneck is making rural geography valuable again. The question is whether rural communities will have the knowledge and capacity to ensure that value flows back to them—or whether they'll simply become the landscape where others build wealth.

Bottom line: When a data center proposal arrives at your community's doorstep (and it likely will), AI literacy is the difference between informed negotiation and rushed decision-making. The power shortage makes this conversation urgent for Southeast Ohio and similar regions.